Introduction

This post, I’m afraid, will be about frustration. Specifically, how Excel ate a day of my life, and project timeline, and project budget. And also how Excel added yet more grey to my head (oh well, at least I have hair). Read on, gentle reader, and yr. obdnt. srvnt. will show you how to avoid Excel driving you nuts.

Introduction to the introduction

My saga of frustration, wonder, and pain all came about because of a simple request to load data from an Excel workbook into Essbase. A lock and send (this was to a BSO cube) is amongst the most basic tasks we can do with Essbase. And I managed to get it wrong. Sigh. Some days are just like that, right? But the strange thing was that I tried really, really, really hard not to get that send of data wrong. In one of those cruel and ironic twists of fate, that very act of trying to get it right is what doomed me. Like I wrote, it was just one of those days.

Some Excel functionality you may not be aware of

Just about everyone knows how linked Excel formulas work. For the one or two of you who do not, here’s a recap of this supposedly straightforward process. Don’t be put off by the simplicity of the examples – it illustrates the issue quite nicely.

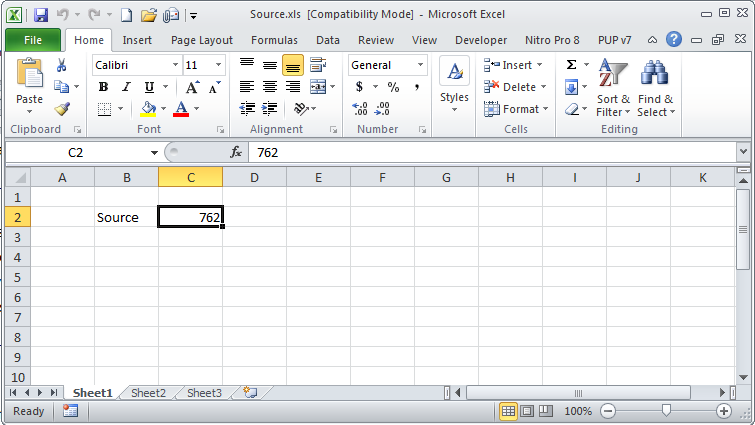

Step 1 – create a source workbook

Easy peasy, lemon squeezy. This is about as simple as a file can get with a single cell of data and a label to identify it. Oh, Source.xls, I love thee.

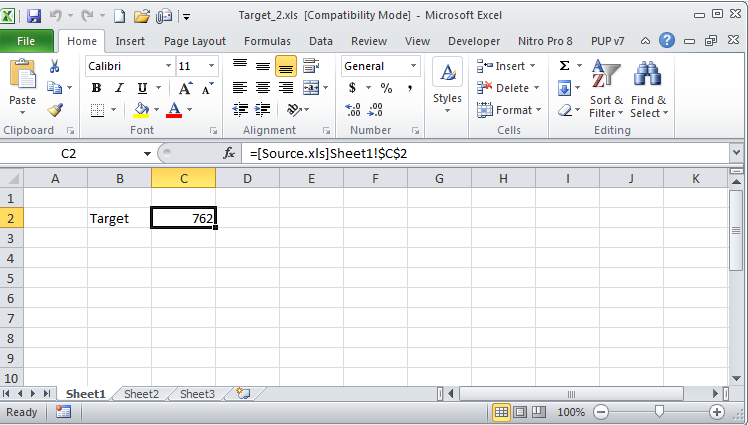

Step 2 – Create a target workbook

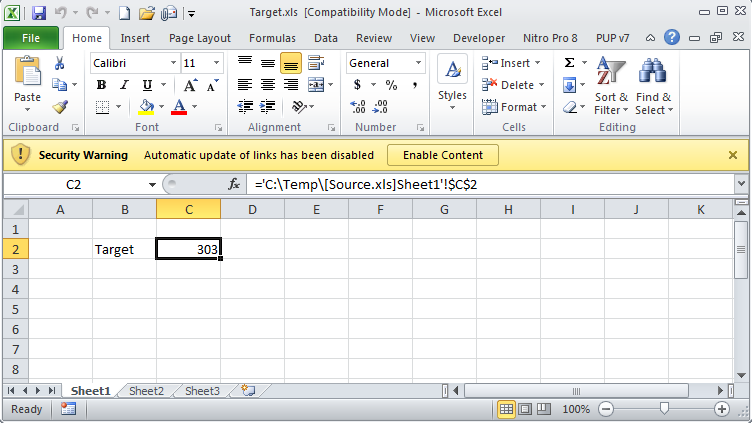

It couldn’t be simpler, right? Create a formula and link cell C2 in Target.xls to C2 in Source.xls. Oh Target.xls, I love thee too.

Step 3 – Create a second target workbook

Huh? Why would anyone do this? Well, in my case because multiple workbooks linked to the same source file is highly germane to this Frustrating Excel “feature” so please humor me. And really, it isn’t that unreasonable to have multiple target workbooks linked to the same file. So Target_2.xls, love is still in the air.

Now we have three files, all with the 762 value. What oh what oh what could the big deal be?

Step 4 – Close one of the linked workbooks

This couldn’t be easier – simply close Target_2.xls. Now there are two files: Source.xls and Target.xls open, both still with a value of 762 in cell C2.

Pay attention, pay attention, pay attention

This is important: Target_2.xls also has a value of 762 in cell C2 as linked data in Excel is stored in two ways, both as a formula link and as a local value. I know that’s confusing, but that feature is how Excel can display information in target workbooks when the source isn’t available.

Stick with me folks, it is about to get very interesting.

Step 5 – Change the value in the source workbook

In Source.xls, change cell C2’s value from 762 to 303. Now both Source.xls and Target.xls have a value of 303 in cell C2.

And of course Target.xls changes as well as the link is live between the two workbooks. All is as it should be, right?

Step 6 – Close both workbooks

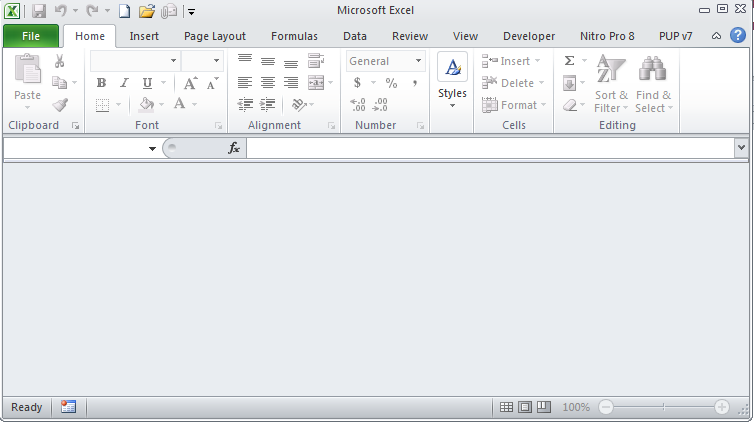

By closing both Source.xls and Target.xls I now have Excel with absolutely nothing open.

If I were to think about the values in the workbooks, they would be as follows:

Workbook name

|

C2 value

|

Source.xls

|

303

|

Target.xls

|

303

|

Target_2.xls

|

762

|

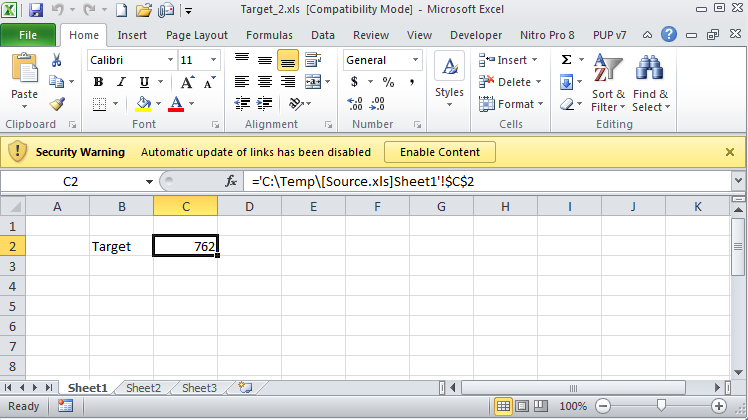

Step 7 – Open Target_2.xls

When I open Target_2.xls, I see that the old value of 762 is still in C2. And that an automatic update of links has been disabled. Thank you, Excel 2010, for not exposing me to Bad Things. Alas, not the Bad Thing I am about to (finally) demonstrate.

Step 8 – Open Target.xls

Before I do this, a quick quiz. Yes, it’s Professor Lackpour and I’m here to make your life a living Excel-themed hell. What value do you think will be in Target.xls?

Did you answer 303? Good. That is consistent with what we saw with Target_2.xls – the last linked value (note again that automatic link updates are disabled) displays.

Now the trick question: What value do you think is in Target_2.xls?

Step 9 – The Stupid Excel Trick

Would you believe not the value we saw a moment ago?

Well, you should, because THE VALUE HAS CHANGED.

Let me repeat the steps:

- I opened Target_2.xls. Its link-disabled C2 cell value was 762.

- I opened Target.xls. Its link-disabled C2 cell value was (and is) 303.

- I toggled worksheets (both are in memory, I simply Ctrl-Tab switched to Target_2.xls) back to Target_2.xls. What value did I find in cell C2? Not 762, but 303.

- Insert three hours of dumbfounded, flustered, and frustrated experimentation trying to prove that what I saw couldn’t possibly be so.

What I saw, and what you now see, in fact is possible. Not only possible, but documented behavior. And we geeks complain about Oracle not fixing bugs in a timely manner. This one is a whopper and Oracle Corporation, Inc.’s fingerprints are nowhere to be found.

Excel, I HATE you.

Explaining the Stupid Excel Trick

A slight exercise of google-fu brings up this Microsoft support document: Multiple workbooks with links to the same source update each other

At your own discretion, throw in a, “You bastard!” interjection when you read the article; repeat as necessary. Those were at least my exact words.

Let me direct you to the really interesting bit with emphasis added by yr. obdnt. srvnt.:

If you open multiple Excel workbooks that all contain links to the same source workbook, and you choose not to update the links, the links in all of the workbooks will be updated to the values that are stored in the last workbook that was opened.

And this other interesting bit, again with a bit of emphasis:

This behavior occurs because link information is stored in an internal link table. This link table is shared among all open workbooks in that instance of Excel. The most recently opened workbook provides the link table that is in memory at any specific time.

There is a workaround, although not one that anyone who uses the Essbase Excel add-in is likely to use:

To work around to this behavior, open the workbooks in different instances of Excel. As a result, each workbook maintains its own internal link table and stored values.

So just to summarize: Multiple target Excel workbooks that link to the same closed source workbook will reflect the data value in the last opened target workbook.

Some would call this a data integrity problem.

Tim Tow would tell you that’s why Excel is so dangerous. I agree.

Explaining what happened to me, and potentially you

I received an updated data workbook via email. This updated workbook had new values that were linked to multiple source workbooks that I did not have access to.

To be sure (oh, the irony) that I was dealing with the right data set, I opened an older, differently named, version of the workbook.

As both the first and the second workbook were linked to the same closed (and not even available) source files, the first workbook’s linked data values were updated by Excel to the second workbook’s linked data values.

I then sent the data values in the first workbook (which were now wrong because they reflected the values in the second workbook) into Essbase.

Thank you, Microsoft Excel.

Arrrgh. But one must be philosophical about things like this lest they drive you mad. It’s an Excel issue, not an Essbase issue, and not even a Cameron issue (well, mostly).

I don’t think I can say it better than my friend Dave Farnsworth, “Linked workbooks are nothing but trouble and always have been. Shoot anyone who uses them and save yourself the pain. No one who knows Excel will vote to convict.”

Be seeing you.