Prequel to the cool stuff

So in part 1, I reviewed how to create an HBR, substitute in local variables to read a Planning form’s Run Time Prompts (RTP), and suggested that it might be worthwhile to read this post.

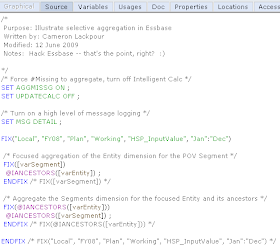

The code sample I used doesn’t do any fancy allocation or calculation. It just aggregates the database using form POV members.

You may be scratching your head, thinking, “Why on earth is he banging on and on about this? Just issue an AGG("Entity", "Segments") statement and be done with it.”

Zoiks! If I wrote one line of code to consolidate a database I would be out of a blog post topic – This Is A Bad Thing.

Secondly, Why Would I Want To Do That? (Ex-Comshare employees/customers/partners know of whom I speak when I write that.)

Because thirdly, and most importantly to you, I can make that database aggregate much, much more quickly.

The Trouble with AGG

Other than sounding like a misquote from a sketch in “Monty Python and the Holy Grail”, what is wrong with an “AGG” when applied to the Entity and Segments dimensions?

Think about it in the context of the form as shown below:

But if I wrote:

AGG("Entity", "Segments");

I would also be aggregating a New England state and a totally obsolete electronic medium, if there were any Gross Profit data for those two members. After all, AGG is kind of a blunt axe.

(For you Intelligent Calc lovers out there, yes, I could use the blocks’ clean or dirty status to not calculate upper level data that already exists, but good luck doing that in a real-world Planning application. There will be clean/dirty blocks all over the place and it is very difficult to keep this approach from going pear-shaped.)

Having disposed of the Intelligent Calc option, while an AGG statement will certainly come up with the right result, Why Would You Want To Do That when you only changed data at the PA and DVD Recorders intersection? Why aggregate data combinations for all of the other level zero Entity and Segment combinations that haven’t even changed?

(These questions do have a point, so bear with me.) You might answer, what else am I to do? How do I consolidate my dimensions if I don’t use AGG or CALC DIM?

Tricky, innit?

Here’s the trick – you don’t need to calculate the whole dimension; you only need to calculate the relevant hierarchies. That’s what the calc script above shows and what your HBR can do for your forms.

@IANCESTORS is your friend

@IANCESTORS is your BFF? That I couldn’t say. But it’s going to be your HBR pal from now on because, if you think like Essbase, you can make Essbase only calculate what you want. *This* is hacking Essbase.

Let’s review the way Essbase aggregates a database, per our dear friend, the Database Administrator’s Guide (DBAG). For the section below we’re going to ignore the Consol database to go along with the DBAG. Just substitute Entity for Product and Segments for Market; they are the first and second consolidating sparse dimensions.

How Essbase aggregates a database

Per the ever-scintillating DBAG, Block Storage Option (BSO) databases calculate dimensions in the following order (we are only going to concern ourselves with sparse, aggregating dimensions), “Sparse dimensions (in the order they display in the database outline)”. See http://download.oracle.com/docs/cd/E10530_01/doc/epm.931/html_esb_dbag/dcacaord.htm and the “Member Calculation Order” section if you need to cite chapter and verse.

Okay, we know the order that Essbase is going to calculate the dimensions. What about the order of the members within the dimensions? This is answered by the “Block Calculation Order” section:

“Essbase calculates blocks in the order in which the blocks are numbered. Essbase takes the first sparse dimension in a database outline as a starting point. It defines the sparse member combinations from this first dimension.”

Using My Very Favorite Essbase Database In The Whole Wide World (MVFEDITWWW), i.e., Sample.Basic, this means that:

“In the Sample Basic database, Product is the first sparse dimension…Product has 19 members…Therefore, the first 19 data blocks in the database are numbered according to the calculation order of members in the Product dimension.”

The DBAG goes on to say:

“The other sparse dimension is Market. The first 19 data blocks contain the first member to be calculated in the Market dimension, which is New York…The next member in the Market dimension is Massachusetts. Essbase creates the next 19 data blocks for sparse combinations of each Product member and Massachusetts.”

This is the important bit:

“Essbase continues until blocks have been created for all combinations of sparse dimension members for which at least one data value exists.”

Guess what, we have just reviewed how Essbase calculates sparse dimensions from the first sparse dimension to the last one, by dimension and within each dimension. In essence, block by block.

1) Product is aggregated for every level 0 Market member (where data exists in Market – Essbase is smart enough not to calculate combinations that don’t exist).

2) Then Market gets aggregated by every Product (that exists, there’s Essbase being smart again).
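To make those two passes concrete, here is a rough calc script sketch of what a two-dimension AGG works out to in Sample.Basic. Treat it as an illustration of the order described above rather than a literal trace of what the calculator does internally; it also assumes neither dimension depends on member formulas, since AGG ignores them.

```
/* Sketch only: conceptually what AGG("Product", "Market") works out to */

/* Pass 1: roll up Product, but only within level 0 Market members */
FIX(@LEVMBRS("Market", 0))
    AGG("Product");
ENDFIX

/* Pass 2: roll up Market for every Product member, level 0 and upper level alike */
AGG("Market");
```

Pass 2 is where the upper level Product blocks get carried up Market, which is why no third pass is needed.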

Okay, but so what?

If we jump back to the Consol database, you may recall that I claimed you don’t need to calculate all of Entity. Do you? Nope. Just the member in the form POV and its ancestors.

You also don’t need to calculate all of Segments. No, sir. Just the Segment that is in the POV and its ancestors.

This is the trick/gimmick/optimization/clever bit/thing you maybe already knew long ago and are now totally disappointed by. Sorry if you’re in the last category – I swear the next post will be better.

How do you do this?

Simple. Let’s assume that the Entity is PA and the Segment is DVD Recorder. Remember, you only need to calculate the relevant branches of the hierarchy. The other level zero/upper level members haven’t changed, so there’s no profit in recalculating them.

Use @IANCESTORS in combination with FIX statements to make Essbase aggregate:

1) The PA ancestor tree for DVD Recorders.

2) The DVD Recorder ancestor tree for the PA ancestor tree.
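As a minimal sketch, those two steps might look like the following in calc script form. The member names are hard-coded from the example (check the exact spelling of the Segments member against your outline). In an HBR they would be swapped for the local variables that read the form’s RTPs, and I am assuming the whole thing would sit inside an outer FIX on the rest of the form’s POV, which is left out here.

```
/* Focused aggregation sketch; "PA" and "DVD Recorder" are the example members */

/* Step 1: for the one Segment that changed, calculate PA's ancestors in Entity */
FIX("DVD Recorder")
    @ANCESTORS("PA");
ENDFIX

/* Step 2: for PA and each of its ancestors, calculate DVD Recorder's ancestors in Segments */
FIX(@IANCESTORS("PA"))
    @ANCESTORS("DVD Recorder");
ENDFIX
```

The calculated members use @ANCESTORS rather than @IANCESTORS because PA and DVD Recorder are level zero input; only the members above them need recalculating. The @IANCESTORS in the second FIX is what carries the Segments rollup across PA and every Entity above it.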

The results of the above and of that AGG/CALC DIM are exactly the same; only the focused aggregation does it in less than a quarter of the time.

Correction

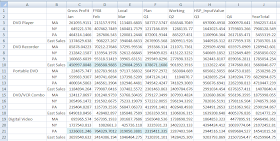

1) Here’s the form with the original data:

2) Let’s round the numbers up and send to Essbase:

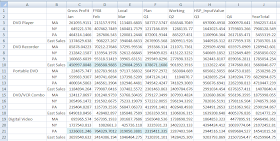

3) Switching to Excel, the blue cells show the data that should be updated. This sheet is easy peasy, as it just shows aggregated dynamic Accounts.

4) And here it is where we expect to see it aggregated one level up, by Entity and Segment.

5) And now let’s look at the results when the focused aggregation HBR is run.

Notice that it doesn’t matter if we do or don't aggregate MA, NY, DVD Player, Portable DVD, and DVD/VCR combo. Only PA and DVD Recorders changed, so only their parents need be aggregated. In other words, only aggregate parents whose children's values change, and leave the rest be.

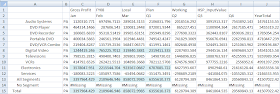

6) Here it is rolled up by Entity.

7) And rolled up by Segment.

The Payoff

What does this mean from a performance perspective (faster=better)?

Looking at the Plansamp.log file, we can see that plain old AGG took almost 7 seconds:

Where the focused aggregation didn’t even make it to 2.5 seconds.

In the Real World

Just yesterday I benchmarked a real Planning HBR with the AGG versus focused aggregation approach. How about 180 seconds versus 24 seconds? Now we’re talking roughly 1/8 of the time (24/180 is about 13%, a 7.5x speedup). This is powerful medicine.

YMMV; these are the results I got for my client’s Essbase database. Every database is different, so my performance improvement won't necessarily map to your application. Regardless, the above technique can make “big” Planning applications fast and give you the run-on-save performance your users demand.

See, I love Hyperion Business Rules.

See you next time.